In request using a single LRU or FIFO algorithm, an object (or several objects) may be deleted during cache replacement. For example, in FIFO algorithm, the oldest saved object will be deleted earliest and the objects that may be still used are deleted, causing miss and delay. In this condition, LRU algorithm seems to be able to maximize hit rate.

Full Answer

What is the difference between LRU cache and FIFO cache?

Yes, LRU cache is based on least recent use of on object in cache but FIFO is based on the time an object is cached. Yes, that is correct. FIFO means First In, First Out, i.e., consider (in this case delete) elements strictly in arrival order.

Is 8 random better than LRU or FIFO?

– rrazd Aug 3 '11 at 18:07 8 random gives better worst-case performance than LRU. The classic example where random is better than LRU and FIFO is a repeated linear sweep through memory slightly larger than the cache size. In that case, both LRU and FIFO will be pessimal, dropping each entry just before it is needed... – Chris Dodd

When is it better to use LRU or random replacement policy?

Set thrashing: When the “program working set” in a set is larger than set associativity " Random replacement policy is better when thrashing occurs In practice: " Depends on workload " Average hit rate of LRU and Random are similar

Why don't modern processors implement true LRU in highly-associative caches?

Approximations of LRU Most modern processors do not implement “true LRU” (also called “perfect LRU”) in highly-associative caches Why? " True LRU is complex " LRU is an approximation to predict locality anyway (i.e., not the best possible cache management policy) Examples: " Not MRU (not most recently used)

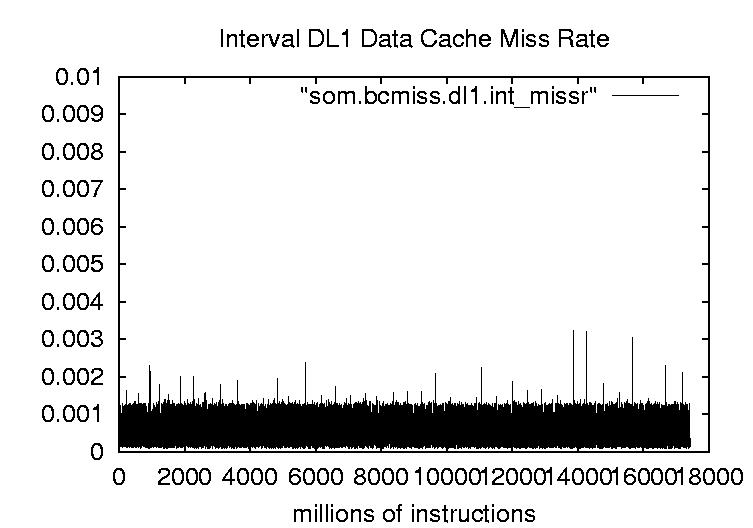

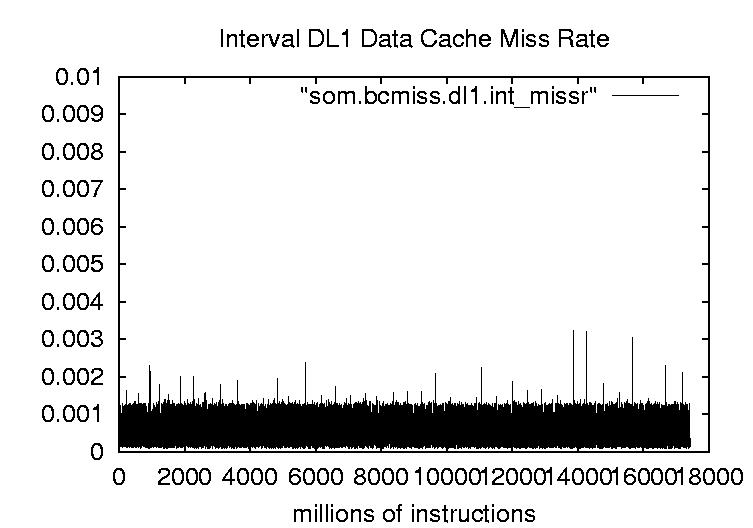

What affects cache miss rate?

The worst cache miss rate occurs when there is no tiling, but the worst CPI occurs with tile size 288 × 288. CPI improves slightly when tiling is discontinued. This is likely due to lower instruction CPI that results from the reduction of executed branch instructions from needing fewer iterations of the tile loops.

Why is LRU better than FIFO for cache replacement?

LRU keeps the things that were most recently used in memory. FIFO keeps the things that were most recently added. LRU is, in general, more efficient, because there are generally memory items that are added once and never used again, and there are items that are added and used frequently.

How is FIFO different from LRU?

FIFO means First In, First Out, i.e., consider (in this case delete) elements strictly in arrival order. LRU is Least Recently Used, the cache element that hasn't been used the longest time is evicted (on the hunch that it won't be needed soon).

How do I reduce cache miss?

How to Reduce Cache Misses (3 Key Tips)Set an Expiry Date for the Cache Lifespan. Every time your cache is purged, the data in it needs to be written into the memory after the first request. ... Increase the Size of Your Cache or Random Access Memory (RAM) ... Use the Optimal Cache Policies for Your Specific Circumstances.

Is LRU better than random?

LRU and 2-random are pretty close, with LRU edging out 2-random for the smaller caches and 2-random edging out LRU for the larger caches. As we might expect, LRU does worse than 2-random when the miss rates are high, and better when the miss rates are low.

Which is better between LRU and optimal page replacement algorithm?

LRU resulted to be the best algorithm for page replacement to implement, but it has some disadvantages. In the used algorithm, LRU maintains a linked list of all pages in the memory, in which, the most recently used page is placed at the front, and the least recently used page is placed at the rear.

Which is best FIFO or LRU?

Sleator and Tarjan proved that the competitive ratio of LRU and FIFO is k . In practice, however, LRU is known to perform much better than FIFO. It is believed that the superiority of LRU can be attributed to locality of reference exhibited in request sequences.

What is FIFO cache algorithm?

In FIFO the item that enter the cache first is evicted first without any regard of how often or how many times it was accessed before. LIFO behaves in exact opposite way - evicts the most recent item from the cache.

What is FIFO cache replacement algorithm?

b) First in First out (FIFO) algorithm: FIFO algorithm replaces the oldest object stored in cache. The algorithm assumes that the oldest objects will not be requested anymore so that the objects may be replaced with the new ones.

How do I increase my cache miss rate?

For small caches, such as the 4 KiB cache, increasing the block size beyond 64 bytes increases the miss rate because of conflicts. For larger caches, increasing the block size beyond 64 bytes does not change the miss rate.

Does the miss rate increase or decrease as the cache size increases?

The textbook shows the miss rates decreasing as the associativity increases. The cache size also has a significant impact on performance. — The larger a cache is, the less chance there will be of a conflict. — Again this means the miss rate decreases, so the AMAT and number of memory stall cycles also decrease.

How do I increase my cache hit rate?

To increase your cache hit ratio, you can configure your origin to add a Cache-Control max-age directive to your objects, and specify the longest practical value for max-age .

Memory Access Traces

As mentioned earlier, the input to the cache simulator is memory traces, which can be generated by executing computer programs. You can use programs such as Valgrind to perform memory profiling and tracing. The trace contains memory addresses accessed during program execution.

Cache Replacement Polies

We have implemented two cache replacement policies i.e. least recently used ( LRU) and First-in first-out ( FIFO) replacement policies.

Input and Output

The program prints out the number of memory reads, memory writes, cache hits, and cache misses in the following format.

Which is better, LRU or FIFO?

Of the three, LRU is generally the best while FIFO is the worst and random comes in somewhere between. You can construct access patterns where any of the three is superior to either of the others, but it is somewhat tricky.

Why is there no caching policy?

No perfect caching policy exists because it would require knowledge of the future (how a program will access memory). But, some are measurably better than others in the common memory access pattern cases. This is the case with LRU. LRU has historically given very good performance in overall use.

Which is better: Large Way or Unknown Code?

Large way's RANDOM is better. Unknown code RANDOM is better for 4 way or above. If it's single function or you want the most speed from something your willing to optimise, and or you don't care about worst case, then LRU is probably what you want.

Is LRU good for x86?

Many of the architectures I have studied use LRU, as it generally provides not only efficiency in implementation, but also is pretty good on average at preventing misses. However, in the latest x86 architectures, I think there are some more complicated things going on. LRU is sort of a basic model.

What is the trend in the design of second level caches?

current trend in the design of second-level caches is anincrease in capacity and associativity. With non-blockingloads and high miss latencies, L2 cache lookup is not astime critical, thus, enabling larger cache associativity.With L2 caches becoming 4-way or 8-way set associative(and maybe even more associative in the future), strategiesthat emphasize the reuse of lines already present in thecache might be more important than those that targetconflict misses. With associative caches and morecorresponding victim choices, it might be time to revisitthe conventional wisdom that cache replacementalgorithms are not much of a performance factor.

How does PRL work?

The PRL algorithm consists of two phases: staticallyclassifying the temporal instructions via profiling andsetting the temporal bit values in the cache at run-time.Online Reference Locality (ORL) follows the samephilosophy, but now the two phases are necessarily closelyinterleaved. Profiling is approximated by keeping a table,created and updated at run-time, of whether an instruction(static PC) exhibits temporal locality or not. An entry inthis table, called the locality table, consists of a single bitrepresenting a locality boolean value. Allocation andupdates of entries are performed according to theinstruction’s reference locality which is based upon theLRU stack depth of the instruction’s previous reference. Inour implementation, we consider that any hit to a non-MRU line indicates the presence of sustained temporallocality. As in the PRL case, the temporal instruction is theone that previously referenced the line with a PC of valueprev_PC. The setting of temporal bits in the cache isperformed as in PRL, except that temporality isdetermined by a table look-up in the locality table ratherthan via temporal or non-temporal instructions. In order to implement ORL, not only do we need thelocality table but also the PC of the instruction thatpreviously referenced the line in the cache (prev_PC)must be recorded with the tag, a field that we call PCtag,for that line in the cache. The PCtag value will be used toupdate values in the locality table. On a L2 access, thecurrent PC (curr_PC) must be sent along with therequested data address. The locality table is similar to abranch history table and the implementation should besimilar.